In many machine learning problems, we do not have enough labeled data to train a deep neural network from scratch, which requires a large dataset, substantial computing power, and long training times. Transfer learning offers a way around this problem.

When a deep neural network is trained on images, its first layers learn very basic features such as edges, lines, colors, shapes, and simple patterns. Because these visual elements appear in almost all images, regardless of the task, this knowledge is useful for many different problems.

So instead of training a completely new model, we reuse those pretrained layers for the new task. We keep their weights fixed and do not update them during training; this is called freezing the layers. Because only a small part of the model still has to be trained, training becomes faster and easier and needs less labeled data.

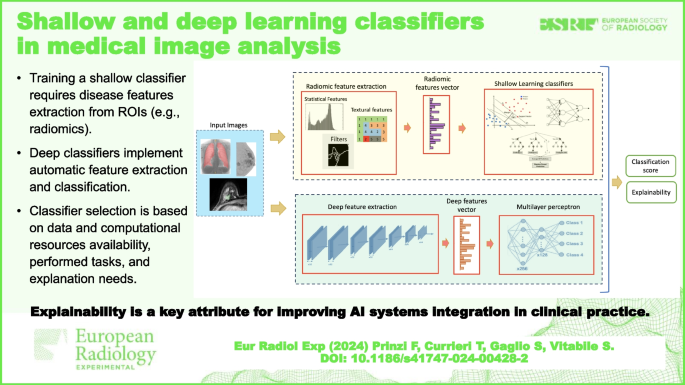

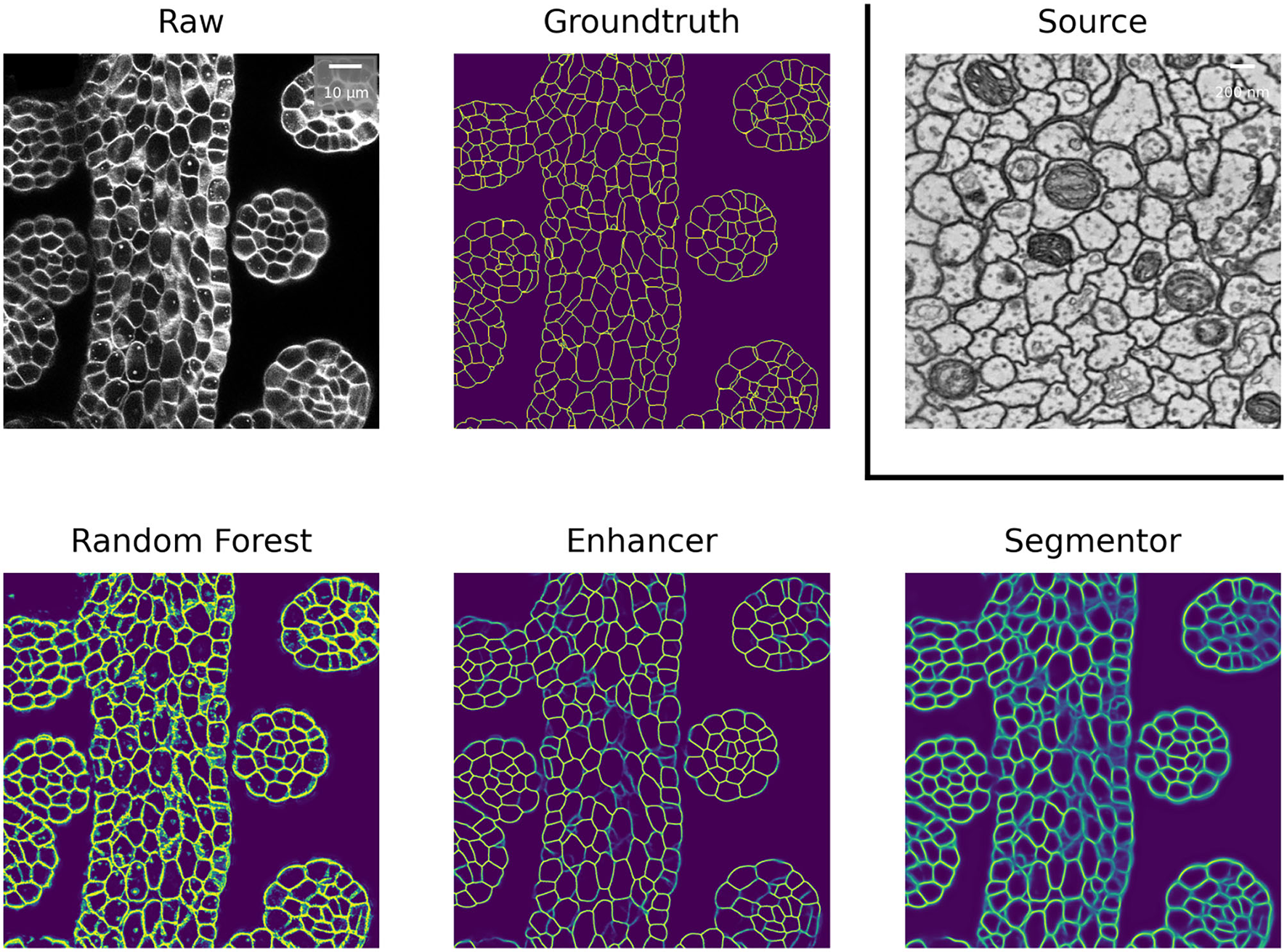

We pass the images through these frozen layers to extract the important information they contain. Their output is called deep features: a numerical representation of each image that helps the new model understand the data better than raw pixels would.

On top of these extracted features, we train a shallow classifier: a simple machine learning model with few or no layers, such as Logistic Regression or a Support Vector Machine (SVM). Unlike deep networks, shallow models are fast and easy to train.
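Training the shallow classifier is then ordinary scikit-learn work on a feature matrix. In this sketch the matrix is synthetic (random 512-dimensional vectors whose mean depends on the class), standing in for the backbone's output; the standardization step, the hyperparameters, and the 75/25 split are illustrative choices, not part of the original text.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import LinearSVC

# Synthetic stand-in for deep features: 400 samples x 512 dims (the size of
# ResNet-18's pooled output). Class-1 samples are shifted by +1 in every
# dimension, so a linear model separates the classes easily. Replace X and y
# with the features and labels extracted from your own images.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=400)
X = rng.normal(size=(400, 512)) + y[:, None] * 1.0

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Two shallow classifiers; features are standardized before fitting.
classifiers = {
    "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "linear_svm": make_pipeline(StandardScaler(), LinearSVC(C=1.0, max_iter=5000)),
}

scores = {}
for name, clf in classifiers.items():
    clf.fit(X_train, y_train)  # only this small model is trained
    scores[name] = clf.score(X_test, y_test)  # mean accuracy on held-out data
    print(f"{name}: test accuracy = {scores[name]:.2f}")
```

A linear model is a natural first choice here because the pretrained features are already informative; a kernel SVM (`sklearn.svm.SVC`) can be swapped in if a linear boundary is not enough.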

Because the pretrained network is used as-is, as a fixed feature extractor, this approach is known as off-the-shelf transfer learning.

For more information about transfer learning, see:

- TensorFlow – Transfer Learning Guide
- PyTorch – Transfer Learning Tutorial
  - Feature extractor
  - Replace classifier
  - Freeze backbone
- Machine Learning Mastery – Transfer Learning
- CS231n – Stanford (Transfer Learning)
- Shallow and deep learning classifiers in medical image analysis

©Image. https://link.springer.com/

©Image. https://www.frontiersin.org/

@Yolanda Muriel
